Three Reasons to Build a Security Data Lake

Several years after the initial hype fizzled out, security data lakes are making a big (data) comeback. Here are three reasons to start building a data lake into your security program.

1. You’ll have one place for all your data, forever

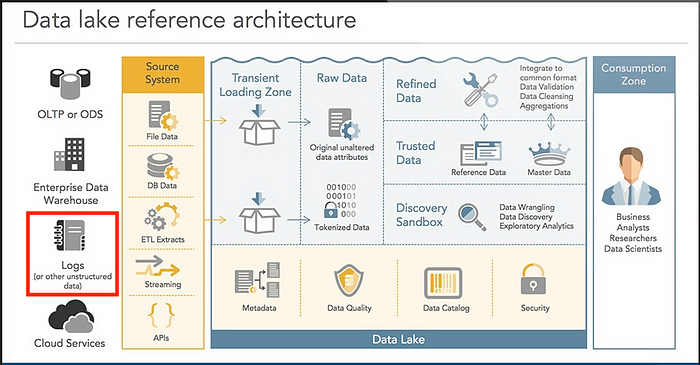

Traditional log management architectures are index-based, requiring a heavy upfront investment in parsing and enrichment. Data lakes differ because the structure of the logs does not have to be defined when the data is captured, an approach known as schema-on-read. The data lake approach is to collect everything as-is and apply different analytics based on the questions you’ll need to answer in the future.

In Snowflake, this can be implemented by loading log data as JSON into a VARIANT column of a landing table. Later, you can create a SQL view that exposes key attributes from the JSON as columns. The same view can also join datasets for enrichment. For example, a log event that contains only a numeric account ID can be joined with a separate inventory table to retrieve the human-readable account alias.
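The following minimal Python sketch illustrates the landing-table-plus-view pattern. It is a stand-in for Snowflake, not Snowflake itself. A list of raw JSON strings plays the role of the VARIANT landing table, a generator plays the role of the SQL view, and a dictionary plays the inventory table. The event fields (`ts`, `event`, `account_id`, `src_ip`) and the account aliases are hypothetical, chosen only for illustration.

```python
import json
from collections.abc import Iterator

# Landing "table": raw JSON events stored as-is, like rows in a VARIANT column.
# Field names and values are hypothetical, for illustration only.
LANDING_TABLE = [
    '{"ts": "2020-01-01T00:00:00Z", "event": "ConsoleLogin", "account_id": "111122223333", "src_ip": "203.0.113.5"}',
    '{"ts": "2020-01-01T00:05:00Z", "event": "DeleteBucket", "account_id": "444455556666", "extra": {"bucket": "logs"}}',
]

# Separate inventory "table" mapping numeric account IDs to human-readable aliases.
ACCOUNT_INVENTORY = {
    "111122223333": "prod-payments",
    "444455556666": "dev-sandbox",
}


def events_view(landing: list[str], inventory: dict[str, str]) -> Iterator[dict]:
    """Schema-on-read 'view': parse each raw event at query time, project the
    key attributes as columns, and enrich each row with the account alias."""
    for raw in landing:
        doc = json.loads(raw)
        account_id = doc.get("account_id")
        yield {
            "ts": doc.get("ts"),
            "event": doc.get("event"),
            "account_id": account_id,
            # A missing attribute becomes None instead of failing ingestion.
            "src_ip": doc.get("src_ip"),
            # Left-join behavior: unknown accounts get a None alias.
            "account_alias": inventory.get(account_id),
        }


for row in events_view(LANDING_TABLE, ACCOUNT_INVENTORY):
    print(row["event"], row["account_alias"], row["src_ip"])
```

Parsing happens at read time. As a result, the second event's missing `src_ip` and unexpected `extra` field cause no ingestion errors. Extracting a new attribute later only means changing the view, not re-ingesting the data.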

This approach is especially helpful for cloud-centric companies whose infrastructure is spread across dozens of SaaS and PaaS solutions, each with its own log schema. For example, when you’re ready to correlate your Workday and Salesforce records, create a view that normalizes the relevant fields for analysis.
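The sketch below illustrates that kind of normalization. It maps two differently shaped sources onto a shared `user` key and then correlates them to flag logins that occur after an employee’s termination date. The field names (`Worker_Email`, `Termination_Date`, `Username`, `LoginTime`) are hypothetical placeholders, not the actual Workday or Salesforce export schemas. The post-termination-login check is just one example of a correlation such a view could support.

```python
from datetime import date, datetime

# Hypothetical raw records: each SaaS source uses its own schema.
# These field names are illustrative, not real Workday or Salesforce formats.
workday_rows = [
    {"Worker_Email": "Alice@Example.com", "Termination_Date": "2020-03-01"},
    {"Worker_Email": "bob@example.com", "Termination_Date": None},
]
salesforce_rows = [
    {"Username": "alice@example.com", "LoginTime": "2020-03-05T09:15:00"},
    {"Username": "bob@example.com", "LoginTime": "2020-03-05T10:00:00"},
]


def normalize_workday(row):
    # Shared schema: lowercased email as the user key, parsed termination date.
    term = row["Termination_Date"]
    return {
        "user": row["Worker_Email"].lower(),
        "terminated_on": date.fromisoformat(term) if term else None,
    }


def normalize_salesforce(row):
    # Same user key, plus a parsed login timestamp.
    return {
        "user": row["Username"].lower(),
        "login_at": datetime.fromisoformat(row["LoginTime"]),
    }


def logins_after_termination(hr_rows, app_rows):
    """Correlate the normalized datasets on 'user' and return logins that
    happened strictly after the user's termination date."""
    terminated = {
        r["user"]: r["terminated_on"]
        for r in map(normalize_workday, hr_rows)
        if r["terminated_on"] is not None
    }
    findings = []
    for login in map(normalize_salesforce, app_rows):
        term = terminated.get(login["user"])
        if term is not None and login["login_at"].date() > term:
            findings.append((login["user"], login["login_at"].isoformat()))
    return findings


print(logins_after_termination(workday_rows, salesforce_rows))
# → [('alice@example.com', '2020-03-05T09:15:00')]
```

Lowercasing the email in both normalizers is what lets the two sources line up on a common key. In a real view, that normalization rule would live in the SQL.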

The economics of cloud-native data lakes are also worth reviewing given the exponential increase in generated log volume. In Snowflake, for example, customers can store data at $23 per terabyte per month. That price applies to compressed data, and logs usually compress at least 3x, so storing a terabyte of raw logs costs under $8 per month. At that cost, data storage becomes a rounding error in the security budget.
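The storage arithmetic can be sketched in a few lines of Python. The sketch uses the $23 per compressed terabyte per month and 3x compression figures quoted above. The daily ingest volume and retention period in the example are hypothetical. Because logs compress at least 3x, these results are an upper bound.

```python
# Figures from the text: $23 per compressed TB per month, ~3x log compression.
PRICE_PER_COMPRESSED_TB_MONTH = 23.0
COMPRESSION_RATIO = 3.0


def monthly_storage_cost(raw_tb: float,
                         price: float = PRICE_PER_COMPRESSED_TB_MONTH,
                         ratio: float = COMPRESSION_RATIO) -> float:
    """Monthly cost of keeping `raw_tb` terabytes of uncompressed logs."""
    compressed_tb = raw_tb / ratio
    return compressed_tb * price


def retention_cost(raw_tb_per_day: float, days_retained: int) -> float:
    """Monthly bill once `days_retained` days of logs are held in storage."""
    return monthly_storage_cost(raw_tb_per_day * days_retained)


print(round(monthly_storage_cost(1), 2))   # → 7.67 (per raw TB)
print(round(retention_cost(0.5, 365), 2))  # → 1399.17 (hypothetical 0.5 TB/day for a year)
```

At roughly $7.67 per raw terabyte per month, a hypothetical year of logs at half a terabyte per day comes to about $1,400 per month in storage.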

Cost savings aside, cheap storage makes it practical to collect more verbose logs and high-volume datasets, such as flow logs, that are prohibitively expensive to send to SIEM solutions. Security teams with a data lake should treat their retention period as “forever”. Your future IR analysts will thank you.

2. Data lakes got easy

The first generation of security data lakes was built on Hadoop. The excitement stemmed from Hadoop’s fit with enterprise machine data, which is large and non-relational. The problem was that Hadoop clusters proved operationally complex and analytically challenging, so their potential for security analytics was never realized.

Fast forward five years, and data lake technology is now a priority for the major cloud vendors (and Snowflake). Technological advances and the resurgence of SQL are making it much easier to get the benefits of self-service analytics on semi-structured big data without the drawbacks of Hadoop and its brethren.

Success stories from organizations using the new generation of data lake solutions are easy to find. These solutions are seeing rapid adoption across industries, and as a result, most security teams have an opportunity to piggyback on existing enterprise data lake projects. That means less overhead and better ROI for forward-looking CISOs developing a cloud-first security strategy.

3. You’ll be ready for data science

Speaking of forward-looking security pros, you’re probably asking what will be done with all these terabytes of collected data. Consider the potential that data science holds for any domain with large volumes of data and a need for insights and predictions.

As enterprises mature their data operations, they extend analytics to every department from Finance to HR to IT. There’s no expectation that each department will hire its own set of data scientists. Instead, there’s a collaborative model where domain experts work with data scientists to define problems and eventually arrive at self-service BI dashboards and analytics-powered automation.

Infosec organizations that build a security data lake within their enterprise data lake will be prepared for the data science transformation that is coming to their company next month or next year.